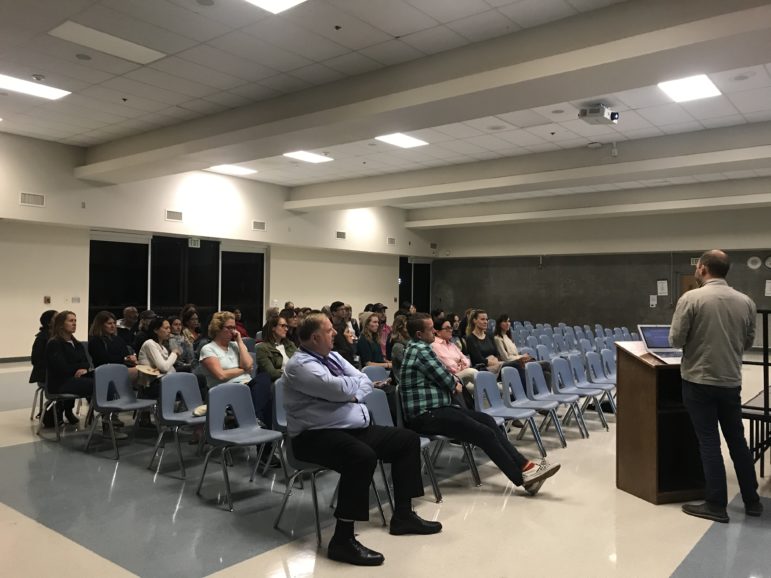

The parent education presentation “How to Meme Responsibly” drew some 30 attendees to the multipurpose room of Piedmont Middle School on November 12, a gathering that included students, parents, members of the city council and school board, representatives of Piedmont’s Appreciating Diversity Committee, and the principals of Millennium High School (MHS), Piedmont High School (PHS), and Piedmont Middle School (PMS).

MHS principal Shannon Fierro and PMS math teacher Karen Bloom, the No Place for Hate coordinator for PMS, organized the meeting. It was prompted by the discovery in September of anti-Semitic memes on the phones of a few MHS students and by an October report of a homophobic hate incident on Instagram involving PHS students.

While questions about students’ hateful uses of social media still outnumber answers, presenters at the meeting shed important light on the topic, including the warning that seemingly humorous memes are a prime way of desensitizing young people to hateful ideologies.

PHS principal Adam Littlefield opened the evening by sounding a note of frustration: “We’re being challenged by this whole idea of social media.”

Fierro reflected on how different the world of information is for young people today compared with when she was in high school, not long ago. “The sphere of influence and impact was relatively small,” she said. “Now if any young person has a bad idea, they can disseminate it to hundreds or thousands of people instantly. They also have access to all the bad ideas from the whole planet.”

A fundamental problem discussed at the meeting is that many parents do not participate in social media, do not understand how it works, and are unable to monitor their children’s activities or give advice.

PMS technology coordinator Adam Saville opened his talk on meme culture with a question that carried a point: he asked the audience whether anyone knew who “Pepe” was. Only a few students responded.

Originally a character in Boy’s Club, a comic created by artist Matt Furie in 2005, Pepe the Frog has since been turned from a lighthearted cartoon into a racist symbol by alt-right activists and other white supremacists. Furie denounced Pepe’s new symbolism and launched a campaign to reclaim the character’s innocence. But Pepe now appears in the ADL’s Hate Symbols Database alongside 213 other known hate symbols.

The point is that once-harmless images can take on menacing new meanings. Saville said the most outrageous memes are the most likely to be shared, and more often than not they carry an insidious message. “Memes can easily veer towards misogyny, racism, and anti-Semitism. A meme that produces high-arousal emotion, whether negative or positive, tends to be shared with greater frequency,” he said.

Vlad Khaykin, ADL associate regional director, led the rest of the evening with a deeper look at online extremism and how it persists.

In California, hate is on the rise. Anti-Semitic hate crimes increased by 21 percent between 2017 and 2018, and anti-Latino hate crimes increased by 18 percent, according to the LA Times. The FBI’s most recent hate crime statistics report a total of 7,120 hate incidents in 2017: 4,047 were motivated by race or ethnicity and 1,419 by religion. Of the religiously motivated incidents, almost 60 percent were anti-Semitic.

Those who monitor racist and hateful speech online face an ever-changing flood of content that can adapt to any format and morph in ways that are difficult to decode. “Literally anything can become a meme to deliver white nationalist ideology,” said Khaykin.

Tech companies aren’t doing more to stop online hate speech and racist activism, Khaykin argued, for three reasons: the profit motive, scale, and a lack of resources.

The more Internet users there are, the more money is generated for those companies and the advertising companies they partner with. And the immense scale on which online material is produced and posted makes it almost impossible to monitor.

Some 500 hours of video are uploaded to YouTube every minute. While there are around 2.4 billion Facebook users, only 30,000 Facebook employees are assigned to safety and security, and only half of those are content moderators, according to The Verge. The technology needed to process that volume of data and filter out bigotry does not yet exist.

A recent ADL survey found that 80 percent of Americans believe that tech companies aren’t doing enough to counter online hate speech. And more than 80 percent want the government to strengthen anti-hate laws and train law enforcement to deal with toxic online content.

Until tech companies and lawmakers act, social media users are left to fend for themselves and are subject to the whims of content creators. Between 30 and 40 percent of Americans are victims of severe online harassment, with young people the most exposed and most at risk.

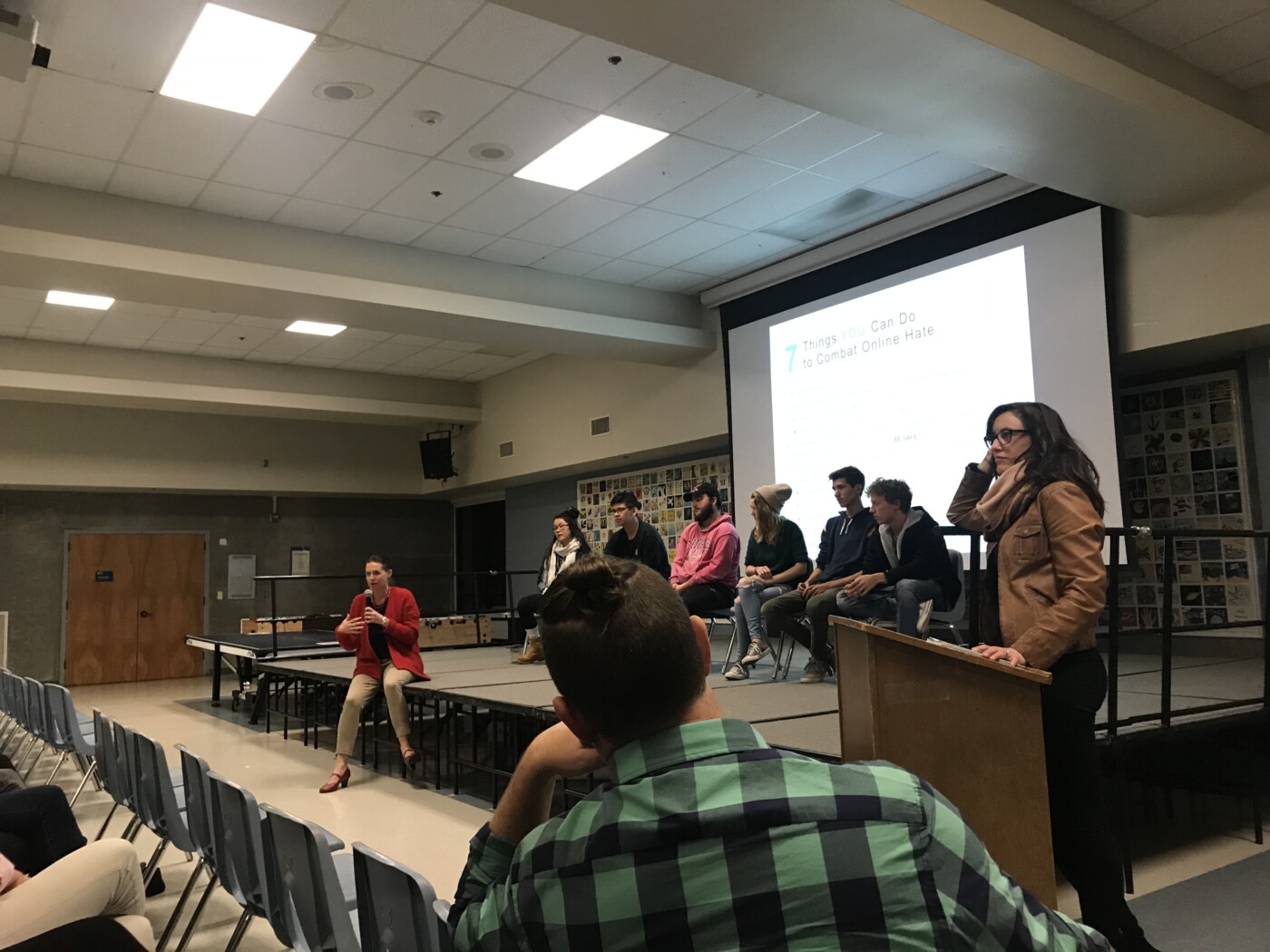

A panel of five PHS juniors and one MHS junior, questioned by principal Fierro, revealed that students are much quicker to pick up on the humor in memes than on their toxic messages, even when the two appear side by side.

“Memes connect me with other people,” said one student. “They’re inside jokes,” said another. “They’re instant happiness,” said a third.

When Fierro asked the students to give the audience advice on how to navigate meme culture, they agreed that education on understanding memes should start in middle school. One student asked parents to trust that their children are not as susceptible to dangerous and racist ideologies as they might think.

There was no discussion about consequences for students who disseminate toxic online content in and around schools.

The ADL recommends these steps to combat online hate:

- Talk to young people about their online experiences and the threats targeting them

- Be aware of the content your kids access online

- Disrupt the spread of anti-Semitic, racist, white nationalist, and other bigoted ideas offline and online

- Learn the terms of service of the platforms you and your kids use and report violations to the tech companies and the ADL

- Call on lawmakers to address online hate